Abstract:

Lack of effective communication is present between the hearing and Deaf

and Hard-of-Hearing (DHH) communities due to the latter’s limited hearing and

speaking abilities. The use of American Sign Language (ASL) is one way both

communities can communicate, but only a few hearing individuals are fluent in this

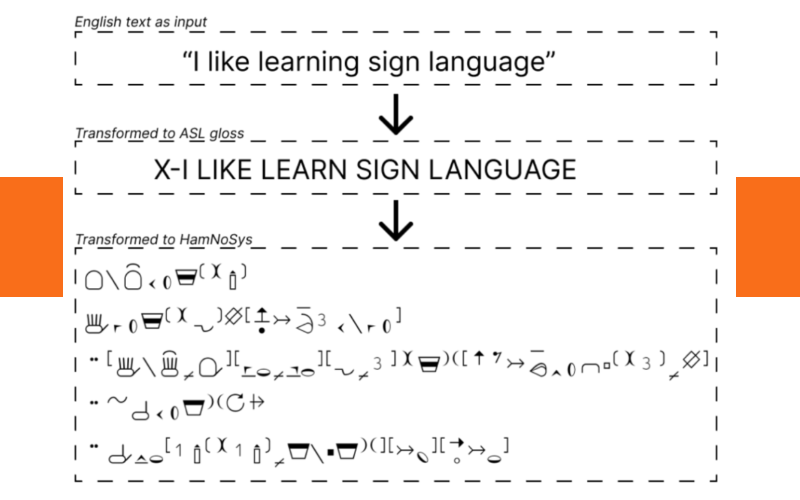

language. This study addresses this communication gap by translating English text

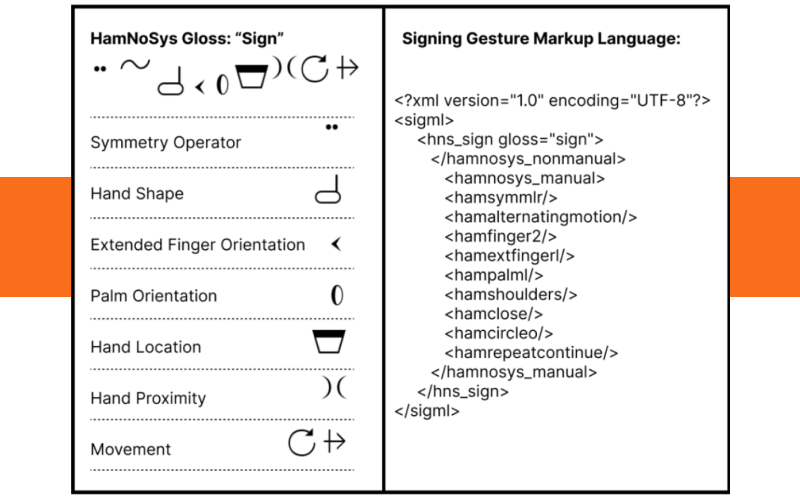

into ASL using a virtual human avatar. Three translation layers were utilized to

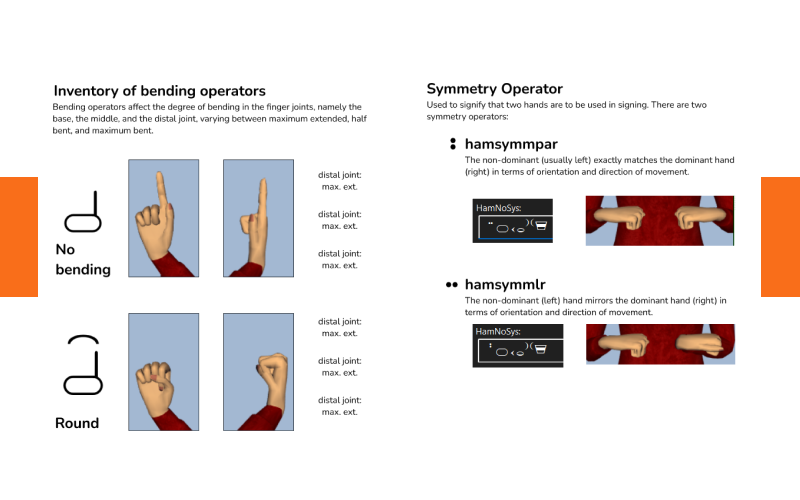

achieve this task: English to ASL Gloss, ASL Gloss to Hamburg Notation System

(HamNoSys), and HamNoSys to Signing Gesture Markup Language (SiGML). The

first translation layer used a Transformer model trained on the ASLG-PC12

dataset. A dictionary of 500 ASL Gloss words with their corresponding HamNoSys

was developed for the second translation layer, and the HamNoSys2SiGML

module was used for the third translation layer. The trained models were evaluated

using BLEU, ROUGE-L, and METEOR. Additionally, certified ASL interpreters and

DHH community members were assigned as human evaluators to assess the

model's output, verify the correctness of the developed corpus, and validate the

signing animations created by the signing avatar.
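BLEU is one of the automatic metrics named above. To illustrate what it measures on gloss output, here is a from-scratch, unsmoothed sentence-level BLEU sketch (uniform weights over 1- to 4-grams, single reference). The thesis does not say which BLEU implementation or smoothing it used, so the function names and the gloss pairs here are illustrative assumptions. The reported scores may come from a different variant, such as corpus-level BLEU.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(reference, hypothesis, max_n=4):
    """Unsmoothed sentence-level BLEU of one hypothesis against one reference.

    Both arguments are whitespace-separated gloss strings.
    """
    ref, hyp = reference.split(), hypothesis.split()
    if not hyp:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        hyp_counts = ngram_counts(hyp, n)
        ref_counts = ngram_counts(ref, n)
        # Modified precision: clip each hypothesis n-gram by its reference count.
        matches = sum(min(count, ref_counts[g]) for g, count in hyp_counts.items())
        total = sum(hyp_counts.values())
        if matches == 0:
            # A zero precision (including having no n-grams at all) zeroes the geometric mean.
            return 0.0
        log_precision_sum += math.log(matches / total)
    # Brevity penalty: only hypotheses shorter than the reference are penalised.
    bp = 1.0 if len(hyp) >= len(ref) else math.exp(1 - len(ref) / len(hyp))
    return bp * math.exp(log_precision_sum / max_n)


if __name__ == "__main__":
    # Hypothetical gloss pairs, not taken from ASLG-PC12.
    print(sentence_bleu("ME GO STORE TOMORROW", "ME GO STORE TOMORROW"))  # 1.0
    print(sentence_bleu("ME GO STORE TOMORROW", "ME GO STORE"))  # 0.0: no 4-grams
```

A zero match count at any n-gram order zeroes the geometric mean, so short gloss sentences often score 0 without smoothing. Library implementations such as NLTK's `sentence_bleu` accept a smoothing function for this case.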

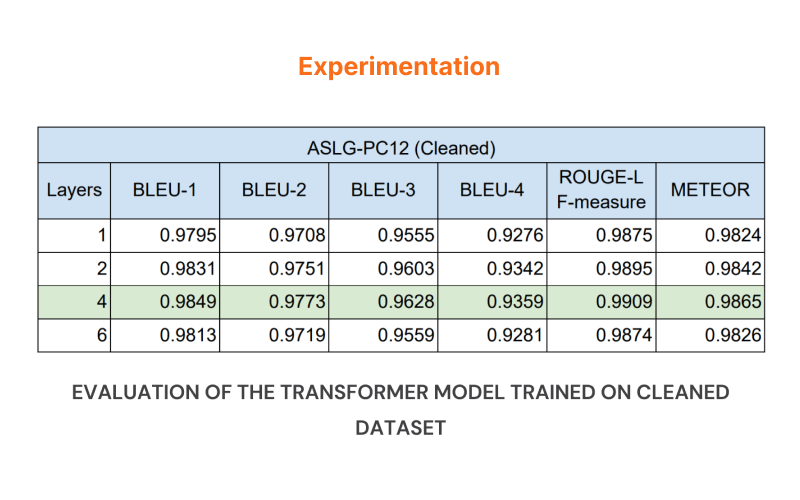

This study revealed that a 4-layer Transformer trained on a cleaned ASLG-PC12 dataset achieves higher scores across the evaluation metrics than models trained on the original dataset. The developed corpus obtained a 77.8% correctness rating. However, native ASL signers did not clearly understand the output signing animations in terms of sentence comprehension and clarity, owing to the system's limited ASL vocabulary.
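The comprehension problem is attributed to the limited vocabulary. A gloss with no dictionary entry presumably has no HamNoSys transcription to animate. One way to quantify this is to measure how much of the Transformer's gloss output the dictionary covers. The sketch below assumes whitespace-separated glosses and a set of dictionary headwords. The function name and the three-word vocabulary are hypothetical and are not the thesis's 500-word corpus.

```python
def vocabulary_coverage(gloss_sentence, vocabulary):
    """Return (covered, missing, ratio) for a gloss sentence against a dictionary.

    `vocabulary` is any container of gloss strings, e.g. the keys of a
    gloss-to-HamNoSys dictionary.
    """
    tokens = gloss_sentence.split()
    covered = [t for t in tokens if t in vocabulary]
    missing = [t for t in tokens if t not in vocabulary]
    # Fraction of tokens the signing avatar could animate from the dictionary.
    ratio = len(covered) / len(tokens) if tokens else 0.0
    return covered, missing, ratio


if __name__ == "__main__":
    # Hypothetical toy vocabulary standing in for the real dictionary.
    vocab = {"ME", "GO", "STORE"}
    print(vocabulary_coverage("ME GO STORE TOMORROW", vocab))
    # (['ME', 'GO', 'STORE'], ['TOMORROW'], 0.75)
```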

SignSpeak

- Category: Undergraduate Thesis

- Translating English Text to American Sign Language using Transformers and Signing Avatars

- Language: Python

- Completion date: April 16, 2024

Project Description

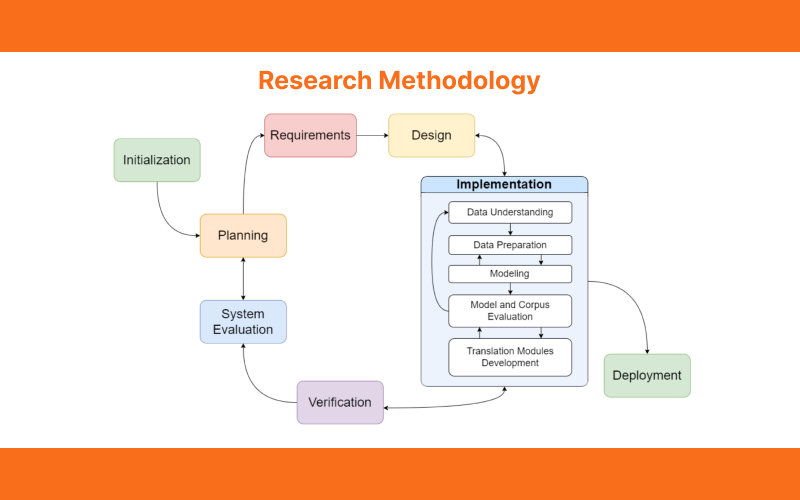

This study aims to develop a web-based application that converts English text into ASL through a virtual human avatar. The translation model was trained on an English-to-ASL-Gloss parallel corpus, the ASLG-PC12 (American Sign Language Gloss Parallel Corpus 2012). The sentence pairs were first prepared and cleaned. A Neural Machine Translation (NMT) model implementing the Transformer architecture was then built. The Transformer model was validated automatically using the BLEU, METEOR, and ROUGE-L metrics. A Binary Search Tree function, HamNoSys2SiGML, and the CoffeeScript WebGL Signing Avatars (CWASA) platform are the modules used to translate English text into animated ASL.
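The description mentions a Binary Search Tree function in the gloss-to-HamNoSys layer but does not show it. Below is a minimal sketch of how such a lookup could work. It assumes the dictionary maps uppercase gloss strings to HamNoSys strings. The class and function names are hypothetical, and the HamNoSys values are placeholders rather than real transcriptions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Node:
    gloss: str
    hamnosys: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


class GlossBST:
    """Binary search tree keyed on gloss strings (lexicographic order)."""

    def __init__(self) -> None:
        self.root: Optional[Node] = None

    def insert(self, gloss: str, hamnosys: str) -> None:
        if self.root is None:
            self.root = Node(gloss, hamnosys)
            return
        node = self.root
        while True:
            if gloss == node.gloss:
                node.hamnosys = hamnosys  # a repeated gloss overwrites the old entry
                return
            if gloss < node.gloss:
                if node.left is None:
                    node.left = Node(gloss, hamnosys)
                    return
                node = node.left
            else:
                if node.right is None:
                    node.right = Node(gloss, hamnosys)
                    return
                node = node.right

    def search(self, gloss: str) -> Optional[str]:
        """Return the HamNoSys string for a gloss, or None if it is not stored."""
        node = self.root
        while node is not None:
            if gloss == node.gloss:
                return node.hamnosys
            node = node.left if gloss < node.gloss else node.right
        return None


def gloss_to_hamnosys(sentence: str, tree: GlossBST) -> List[Tuple[str, Optional[str]]]:
    """Look up each gloss token; None marks a word missing from the dictionary."""
    return [(tok, tree.search(tok)) for tok in sentence.split()]


if __name__ == "__main__":
    tree = GlossBST()
    # Placeholder values; real entries would hold HamNoSys symbol strings.
    for gloss, ham in [("STORE", "<S>"), ("GO", "<G>"), ("ME", "<M>")]:
        tree.insert(gloss, ham)
    print(gloss_to_hamnosys("ME GO TOMORROW", tree))
    # [('ME', '<M>'), ('GO', '<G>'), ('TOMORROW', None)]
```

An unbalanced tree like this one degenerates into a linked list, with O(n) lookups, if the 500 entries are inserted in alphabetical order. Inserting them in shuffled order, using a self-balancing tree, or using a plain Python `dict` avoids this.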